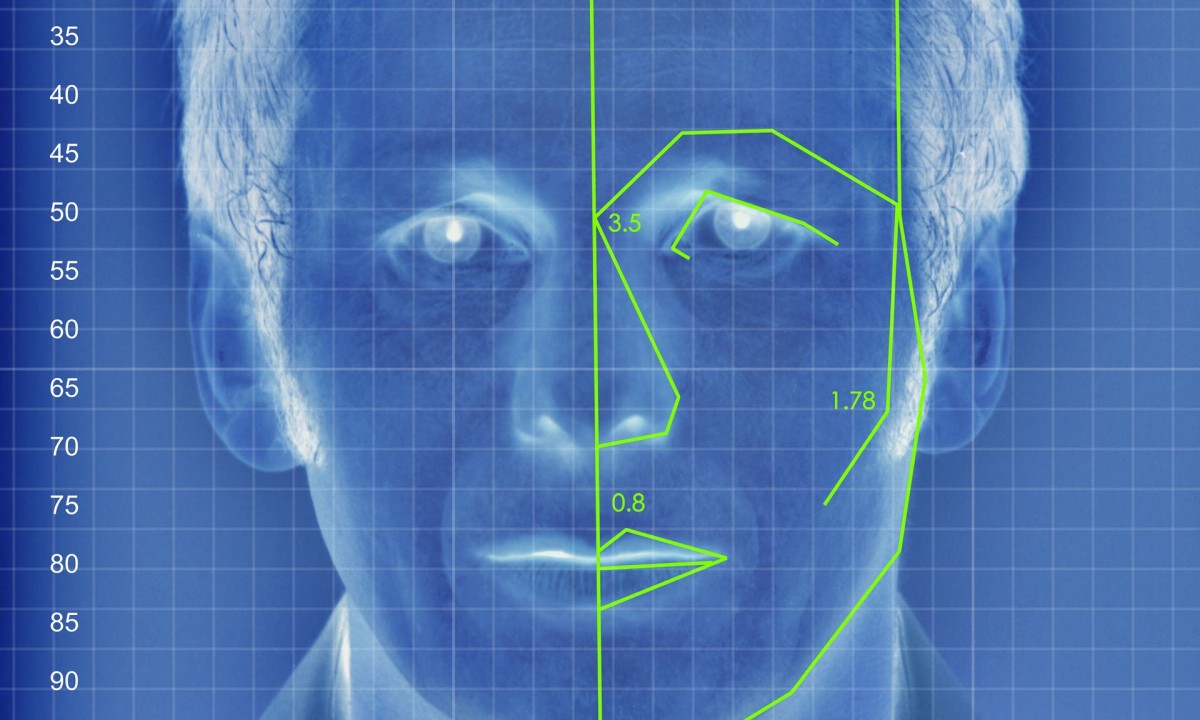

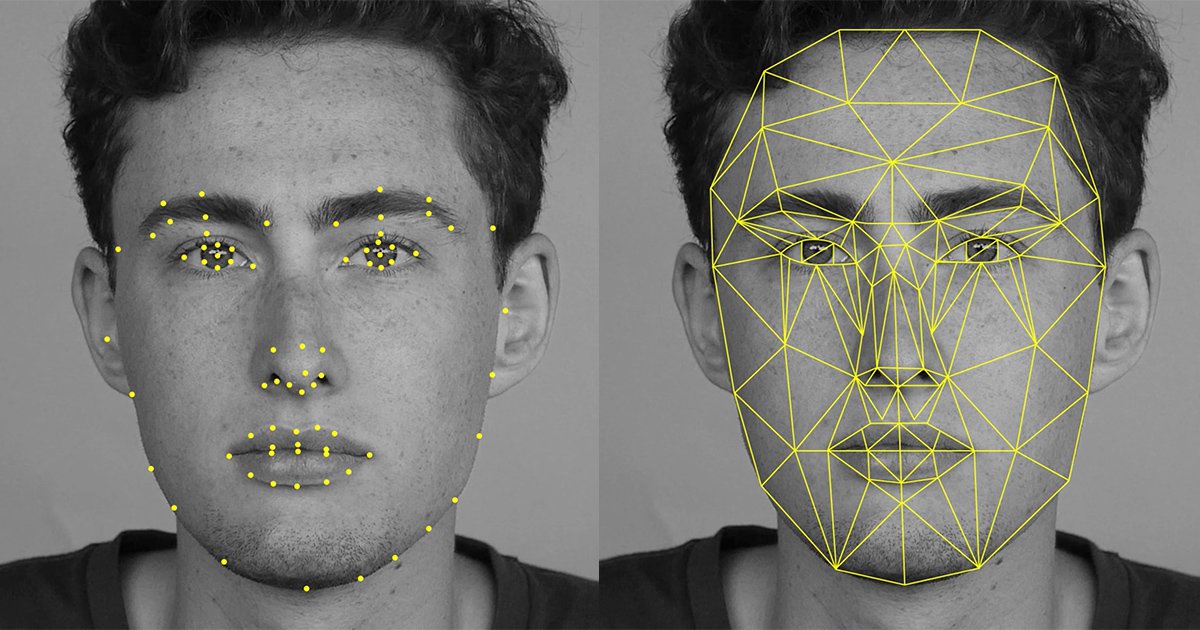

Approximately half of adult Americans’ photographs are stored in facial recognition databases that can be accessed by the FBI, without their knowledge or consent, in the hunt for suspected criminals. About 80% of photos in the FBI’s network are non-criminal entries, including pictures from driver’s licenses and passports. The algorithms used to identify matches are inaccurate about 15% of the time, and are more likely to misidentify black people than white people.

These are just some of the damning facts presented at last week’s House oversight committee hearing, where politicians and privacy campaigners criticized the FBI and called for stricter regulation of facial recognition technology at a time when it is creeping into law enforcement and business.

“Facial recognition technology is a powerful tool law enforcement can use to protect people, their property, our borders, and our nation,” said the committee chair, Jason Chaffetz, adding that in the private sector it can be used to protect financial transactions and prevent fraud or identity theft.

“But it can also be used by bad actors to harass or stalk individuals. It can be used in a way that chills free speech and free association by targeting people attending certain political meetings, protests, churches, or other types of places in the public.”

Furthermore, the rise of real-time face recognition technology that allows surveillance and body cameras to scan the faces of people walking down the street was, according to Chaffetz, “most concerning”.

“For those reasons and others, we must conduct proper oversight of this emerging technology,” he said.

“No federal law controls this technology, no court decision limits it. This technology is not under control,” said Alvaro Bedoya, executive director of the Center on Privacy and Technology at Georgetown Law.

The FBI first launched its advanced biometric database, Next Generation Identification, in 2010, augmenting the old fingerprint database with further capabilities including facial recognition. For five years, the bureau neither informed the public about its new capabilities nor published the privacy impact assessment required by law.

Unlike fingerprints and DNA, which are collected following an arrest, photos of innocent civilians are being collected proactively. The FBI made arrangements with 18 different states to gain access to their databases of driver’s license photos.

“I’m frankly appalled,” said Paul Mitchell, a congressman for Michigan. “I wasn’t informed when my driver’s license was renewed my photograph was going to be in a repository that could be searched by law enforcement across the country.”

Last year, the US Government Accountability Office (GAO) analyzed the FBI’s use of facial recognition technology, found it lacking in accountability, accuracy and oversight, and made recommendations on how to address the problem.

A key concern was how the FBI measured the accuracy of its system, particularly the fact that it tests neither for false positives nor for racial bias.

“It doesn’t know how often the system incorrectly identifies the wrong subject,” explained the GAO’s Diana Maurer. “Innocent people could bear the burden of being falsely accused, including the implication of having federal investigators turn up at their home or business.”

Inaccurate matching disproportionately affects people of color, according to studies. Not only are algorithms less accurate at identifying black faces, but African Americans are disproportionately subjected to police facial recognition.

“If you are black, you are more likely to be subjected to this technology, and the technology is more likely to be wrong,” said Elijah Cummings, a congressman for Maryland, who called for the FBI to test its technology for racial bias – something the FBI claims is unnecessary because the system is “race-blind”.

“This response is very troubling. Rather than conducting testing that would show whether or not these concerns have merit, the FBI chooses to ignore growing evidence that the technology has a disproportionate impact on African Americans,” Cummings said.

Kimberly Del Greco, the FBI’s deputy assistant director of criminal justice information, said that the FBI’s facial recognition system had “enhanced the ability to solve crime” and emphasized that the system was not used to positively identify suspects, but to generate “investigative leads”.

Even the companies that develop facial recognition technology believe it needs to be more tightly controlled. Brian Brackeen, CEO of Kairos, told the Guardian he was “not comfortable” with the lack of regulation. Kairos helps movie studios and ad agencies study the emotional response to their content and provides facial recognition in theme parks to allow people to find and buy photos of themselves.

Brackeen said that the algorithms used in the commercial space are “five years ahead” of what the FBI is doing, and are much more accurate.

“There has got to be privacy protections for the individual,” he said.

There should be strict rules about how private companies can work with the government, said Brackeen, particularly when companies like Kairos are gathering rich datasets of faces. Kairos refuses to work with the government because of Brackeen’s concerns about how its technology could be used for biometric surveillance.

“Right now the only thing preventing Kairos from working with the government is me,” he said.
